After converting a couple of legacy Ubuntu 6 (!) VMs from ESXi VMDK disks to qcow2, I set up the guests in Proxmox and attached the qcow2 images using the SATA interface.

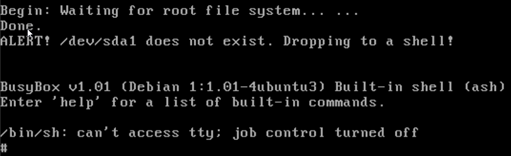

During boot, the console showed

"Begin: Waiting for root file system... ..."

and, after a minute or two of waiting:

"ALERT! /dev/sda1 does not exist. Dropping to a shell!"

Eventually it dropped into a BusyBox shell and could not boot.

To resolve this:

- Change the guest's disk interface to IDE by editing /etc/pve/qemu-server/<vm-id>.conf for your VM.

- Reboot and press ESC to bring up the GRUB menu.

- In the GRUB menu, select the recovery mode entry and press Enter to boot it.

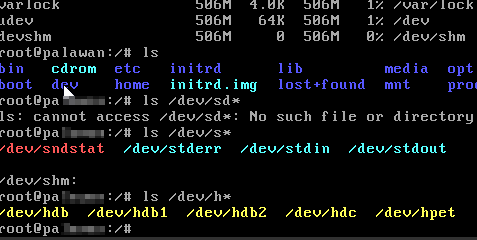
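The config change in the first step can be sketched as a small filter. This is a minimal sketch under several assumptions. The disk is attached as `sata0`. No `ide0` entry exists yet. The boot disk is named either in an older-style `bootdisk: sata0` line or in a `boot: order=...` line, depending on the Proxmox version. The function name `sata0_to_ide0` is hypothetical and not part of Proxmox. The function reads the config on stdin and writes the edited copy to stdout, so you can review the result before replacing the real file. Stop the VM first.

```shell
# Hypothetical helper (not part of Proxmox): rewrite a VM config so the disk
# attached as sata0 is attached as ide0 instead.
# Assumes the config has no existing ide0 entry.
# Reads the config on stdin and writes the edited copy to stdout.
sata0_to_ide0() {
    # 1. rename the disk key itself
    # 2. older configs: point "bootdisk:" at the new key
    # 3. newer configs: replace sata0 in the "boot: order=..." list
    sed -e 's/^sata0:/ide0:/' \
        -e 's/^bootdisk: sata0$/bootdisk: ide0/' \
        -e 's/^\(boot: .*\)sata0/\1ide0/'
}
```

For example, with a hypothetical VM ID of 100, run `sata0_to_ide0 < /etc/pve/qemu-server/100.conf > /root/100.conf.new`. Check the output, then copy it over the original with `cp`.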

When you are dropped into BusyBox again, you can now list the disks and partitions with `ls /dev/h*`.

In my case, Ubuntu had named the disk /dev/hdb and its partitions /dev/hdb1 and /dev/hdb2.
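If the `ls /dev/h*` listing is hard to read, the kernel's own list of block devices in /proc/partitions can also help. This is a minimal sketch. It assumes /proc is mounted in the rescue shell (the initramfs normally mounts it) and that an `awk` is available. Not every initramfs BusyBox build includes one, so you may need to run it from a live system instead. The name `list_partitions` is hypothetical. The helper only recognises IDE (hdX) and SCSI/SATA (sdX) names.

```shell
# Hypothetical helper: print the /dev path of every IDE (hdX) or SCSI/SATA (sdX)
# partition found in /proc/partitions-style input read from stdin.
# Whole disks such as "hdb" are skipped because they have no trailing number;
# the header line and ram/loop devices do not match the pattern either.
list_partitions() {
    awk '$4 ~ /^(hd|sd)[a-z]+[0-9]+$/ { print "/dev/" $4 }'
}
```

Usage: `list_partitions < /proc/partitions`.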

The root file system was now on /dev/hdb1, so all I had left to do was:

- Reboot again and press ESC to bring up the GRUB menu.

- Select the recovery mode entry and press e to edit its commands.

- Change the root device from /dev/sda1 to /dev/hdb1, then press b to boot:

      linux /boot/vmlinuz-3.13.0-29-generic root=/dev/hdb1

Ubuntu will now find the root device and complete the boot. However, you will end up in recovery mode and need to fix a few things so that the next boot works normally:

- Edit /boot/grub/menu.lst and fix the root device entry so that Ubuntu boots from the correct root device next time.

- Swap partition – if your VM has a swap partition, it has most likely failed too, because its device has been renamed to /dev/hdb2, /dev/hdb5, etc. You should be able to identify it using blkid, and you may need to use swapon to re-activate it. See this guide for details.

- /etc/fstab – update the device names for the root mount and the swap partition here too.
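The menu.lst and fstab edits above boil down to renaming a device, and the swap check boils down to reading blkid output. This is a minimal sketch with two hypothetical helpers, `rename_dev` and `swap_devices`, written as filters so the edited copy can be compared with `diff` before it replaces the real file. It assumes the old and new names differ only in the disk prefix (sda to hdb, as in my case). It also assumes blkid prints lines of the form `/dev/hdb5: UUID="..." TYPE="swap"`. Entries that use `UUID=` rather than a /dev name are left alone, since a filesystem UUID does not change when the disk controller does.

```shell
# Hypothetical helpers, not part of any package.

# rename_dev OLD NEW: read a file such as menu.lst or fstab on stdin and replace
# partition names /dev/OLDn with /dev/NEWn, e.g. /dev/sda1 -> /dev/hdb1 and
# root=/dev/sda1 -> root=/dev/hdb1. UUID= entries pass through unchanged.
rename_dev() {
    sed -e "s#/dev/$1\([0-9]\)#/dev/$2\1#g"
}

# swap_devices: read blkid output on stdin and print the device name of
# every partition whose TYPE is swap.
swap_devices() {
    grep ' TYPE="swap"' | cut -d: -f1
}
```

For example, run `rename_dev sda hdb < /etc/fstab > /tmp/fstab.new` and check it with `diff /etc/fstab /tmp/fstab.new` before copying it back. Running `blkid | swap_devices` shows which device to pass to `swapon`.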

On the next reboot, your Ubuntu VM should find the root device and boot normally.

######################################

2 April 2018

I found myself in a similar, but more difficult, situation again.

I was using Clonezilla in server-client mode to clone a VMware Workstation VM over the network to a Proxmox VM.

After cloning, the Proxmox VM could not find its disk at boot. I could not see any disks even in recovery mode, with either the SATA or the SCSI interface in Proxmox!

A tip in this LinuxQuestions thread pointed me in the right direction: https://www.linuxquestions.org/questions/debian-26/booting-to-initramfs-gave-up-waiting-dev-disk-by-uuid-xxxx-does-not-exist-4175585901/

- Boot the VM from Clonezilla (or another Linux live/rescue system).

- Drop to a command prompt (Clonezilla) or rescue mode (Ubuntu).

- Regenerate the initramfs with the following commands (note that I left out the depmod command from the original article):

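      mount /dev/sda1 /mnt
      mount --bind /dev /mnt/dev
      mount --bind /proc /mnt/proc
      mount --bind /sys /mnt/sys
      chroot /mnt
      # the following commands run inside the chroot
      # depmod `uname -r`    <- in the original article; I omitted this
      update-initramfs -u
      update-grub
      # leave the chroot before rebooting
      exit
      reboot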

Afterwards, the cloned VM could see its drive and boot. My guess is that chrooting into the mounted root partition and regenerating the initramfs did the trick.

You may also need to fix the swap partition, as described above.
